Artificial intelligence has come a long way in recent years. In fact, some workers now worry that AI could do their jobs more efficiently than they can.

AI models such as ChatGPT have already made inroads in a number of fields, and even financial experts on Wall Street have started to worry about job security. While ChatGPT is a useful tool, Wall Street analysts have no need to worry, at least not yet. ChatGPT would likely fail the exam that many financial analysts take to earn their professional credentials.

Could ChatGPT Overtake the Finance Industry?

Hard work, perseverance, and a degree from a respected university are what most financiers need to land a job on Wall Street. Even so, financial analysts have worried that ChatGPT could take their jobs right out from under them.

Artificial Intelligence Has Its Limits

A study conducted by JPMorgan researchers and university academics found that AI models such as ChatGPT and GPT-4 still fall short of reliably passing the Chartered Financial Analyst (CFA) exam.

Both AI models were created by OpenAI and have soared to the top of their class in terms of popularity and usability. When asked a series of questions that most financial analysts could answer, ChatGPT struggled to generate “complex financial reasoning.”

The Test Score Was Not High Enough

The CFA exam consists of three parts and covers everything from securities analysis to portfolio management.

The test is a standard way of determining if someone would be qualified for a job as a financial analyst, and the results showed that ChatGPT was not as smart as it was perceived to be. “Based on estimated pass rates and average self-reported scores, we concluded that ChatGPT would likely not be able to pass the CFA level I and level II under all tested settings,” wrote the researchers in the study.

The Exam Is Challenging, to Say the Least

Passing the CFA exam is like earning a badge of honor in the finance industry.

Candidates can take years to complete all three levels of this comprehensive exam. Between long hours of studying and exam prep, aspiring analysts need to know the ins and outs of the industry if they ever want to work on Wall Street. Passing all three levels signals that a financial analyst is knowledgeable, and it often leads to more lucrative career opportunities.

Few People Pass All Three Levels of the Exam

Although ChatGPT technically failed, many people who take the test also don’t get a passing mark.

The CFA Institute revealed that only 37% of those who took the first level of the exam in February 2023 passed. Level two of the exam in May saw 52% of test-takers pass, while only 47% of people taking the level three test in August passed. In that sense, ChatGPT's result puts it in the same company as the many human candidates who fall short.

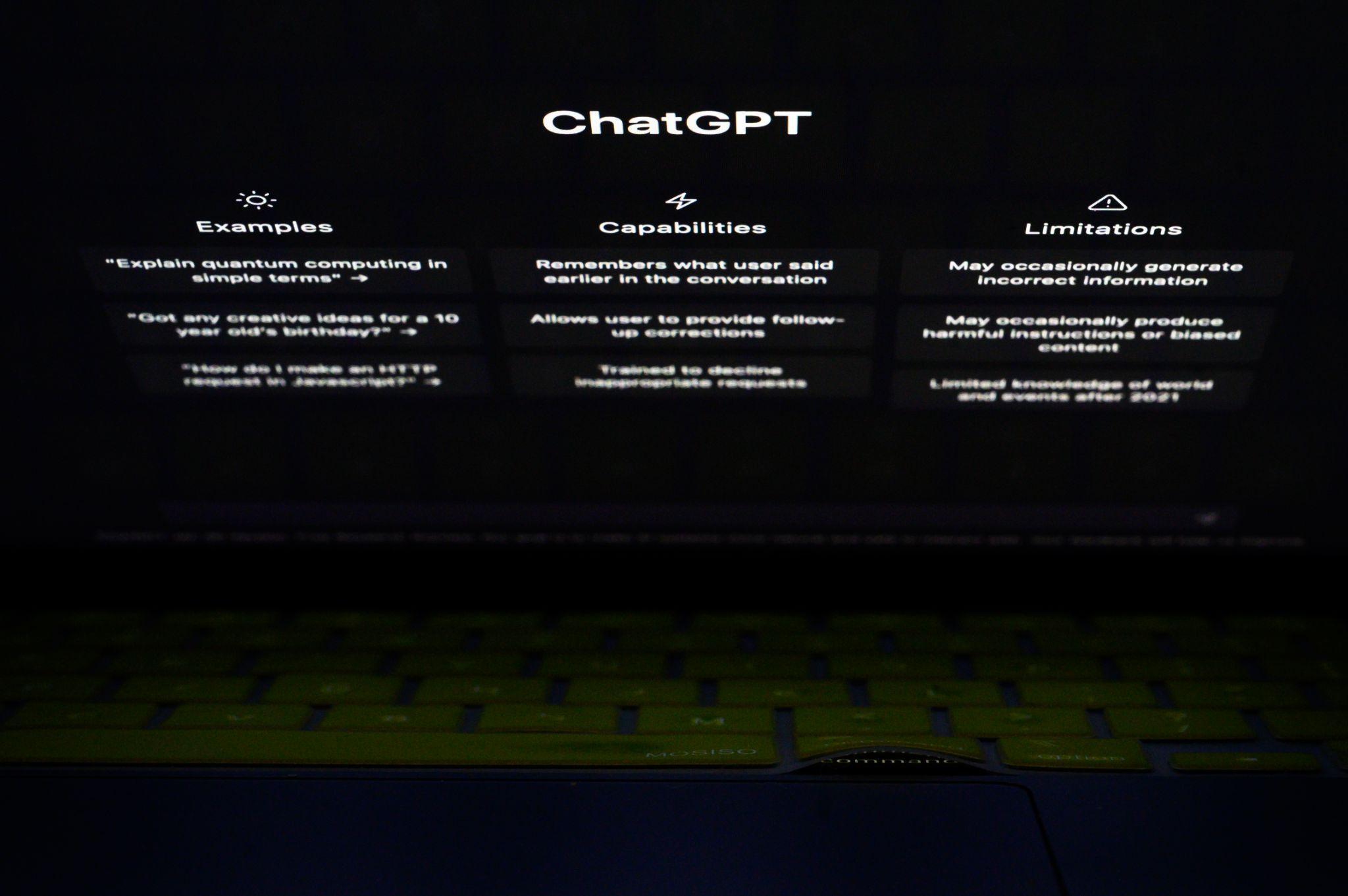

ChatGPT Got Confused by Multiple-Choice Questions

ChatGPT was asked a series of multiple-choice questions during the first phase of the exam.

While the AI model is known for its broad general knowledge, it struggled with these questions. According to the study, ChatGPT made various "knowledge-based errors," which the researchers described as "errors where the model lacks critical knowledge required to answer the question." GPT-4, OpenAI's more advanced paid model, made similar errors, though fewer of them.

GPT-4 Is the Superior AI Model

Although the first level has the lowest pass rate of the three, both OpenAI models did worse on the second level.

Level II of the exam is said to require "more analysis and interpretation of information" on equity investments, financial reporting, and beyond. Unsurprisingly, GPT-4 outperformed ChatGPT on both levels. The researchers estimated that GPT-4 could pass the first two levels with the right prompting, while ChatGPT would likely fail both. "GPT-4 would have a decent chance of passing the CFA Level I and Level II if prompted," said the study.

Wall Street Financiers Can Keep Their Jobs

ChatGPT is remarkably good at processing and generating human language.

This skill can be applied to tasks such as sentiment analysis or summarizing reports. While language models such as ChatGPT and GPT-4 continue to improve, finance professionals on Wall Street aren't in danger of losing their jobs to either AI tool.

Wall Street Banks Have Banned ChatGPT

Interestingly, several Wall Street banks have banned employee use of ChatGPT. Citigroup, Goldman Sachs and even JPMorgan have banned the OpenAI model from being used for business purposes.

For the banks of Wall Street, the ban on ChatGPT isn’t a judgment on the AI software’s capabilities. Instead, big banks are cracking down on ChatGPT use to keep sensitive information secure. The ban makes sense, as the finance industry enforces extra security measures to ensure that clients’ money is kept safe.

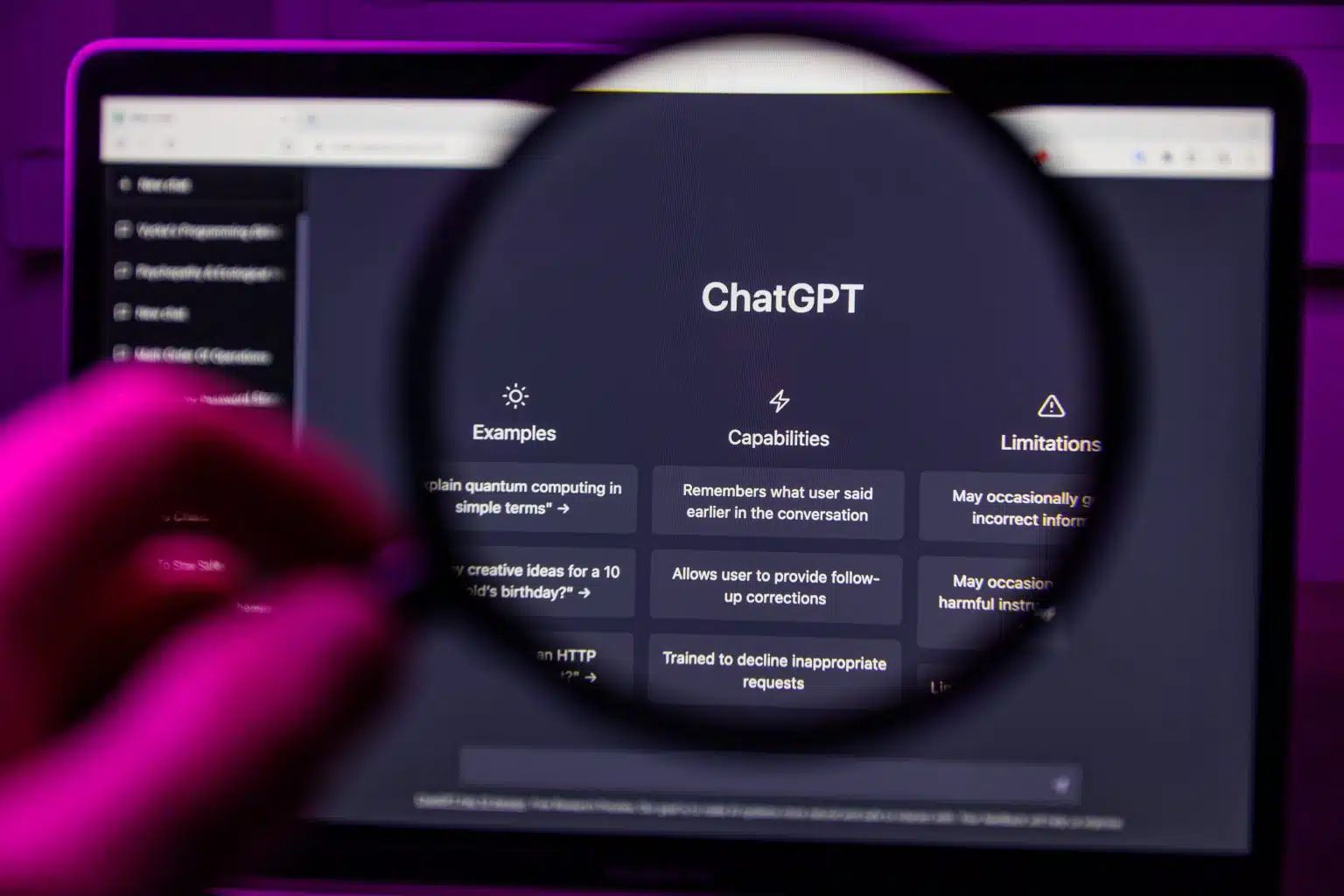

Accuracy and Reliability Are Common Concerns with ChatGPT

While ChatGPT is capable of comprehending a seemingly endless array of tasks and prompts, the AI model is far from foolproof.

A recent study by researchers at Stanford University and UC Berkeley found that the models behind ChatGPT can change significantly over time. On one simple math task, identifying whether a number is prime, GPT-4's accuracy dropped from about 98% in March 2023 to an astonishing 2% in June 2023. The models were tested on "solving math problems, answering sensitive questions, generating software code, and visual reasoning," according to the study.

ChatGPT Will Evolve and Improve over Time

Not only did ChatGPT get many answers wrong, but it also failed to show how it reached incorrect conclusions.

James Zou, a Stanford professor and one of the study's co-authors, explained how prompting can help ChatGPT reach correct answers to simple problems. "It's sort of like when we're teaching human students," he said. "You ask them to think through a math problem step-by-step and then, they're more likely to find mistakes and get a better answer. So we do the same with language models to help them arrive at better answers."