ChatGPT users got a shock recently when OpenAI’s chatbot began responding to them in bizarre and nonsensical ways.

The AI’s responses quickly became a talking point across social media, with many commentators wondering whether something was seriously wrong with the entire system. Though Sam Altman’s OpenAI has announced that the issue is fixed, questions remain.

ChatGPT Goes “Insane”

On the night of February 20, 2024, users began to notice that something was clearly wrong with ChatGPT. The AI’s responses turned bizarre, and some users even received answers in Spanglish.

Users quickly took to social media to ask what was going on. “Has ChatGPT gone temporarily insane?” one Reddit user asked. The problems were widespread, but they varied from user to user: no two people seemed to receive quite the same kind of nonsense.

A Response in Spanglish

One user, Sean McGuire, took to X, formerly known as Twitter, to share his experience. According to McGuire, ChatGPT responded to him in Spanglish that was barely understandable.

“Sometimes, in the creative process of keeping the intertwined Spanglish vibrant, the cogs en la tecla might get a bit whimsical. Muchas gracias for your understanding, y I’ll ensure we’re being as crystal clear como l’eau from now on,” ChatGPT reportedly wrote to him.

ChatGPT Goes Victorian

That’s not all. While McGuire received an odd answer in Spanglish, other users got even more bizarre responses from ChatGPT. One Reddit user said they received an incomprehensible reply that read like a Victorian epic.

“It’s lost its mind,” this user said. “I asked it for a concise, one sentence summary of a paragraph and it gave me a [Victorian]-era epic to rival Beowulf, with nigh incomprehensible purple prose. It’s like someone just threw a thesaurus at it and said, ‘Use every word in this book.’”

Answering in Shakespearean Rants

Receiving a Victorian epic was certainly peculiar, but it wasn’t the only unexpected thing ChatGPT did. One Reddit user explained that they wanted to talk to the chatbot about Groq, but that is not what the AI did.

Instead, ChatGPT started to get quite Shakespearean. “I was talking to it about Groq, and it started doing Shakespearean style rants,” this user explained.

The AI Repeated Itself

While some users had the odd encounters described above, others found that the AI was simply malfunctioning. One of the most common issues that night was that ChatGPT kept repeating itself over and over again.

Within a single message, the chatbot would write the same thing again and again. For those used to getting real answers from the AI, this was definitely unusual. For some, it was even scary.

Wrong Answers Only

Many ChatGPT users are aware that the AI sometimes gives completely wrong answers. For the most part, though, the chatbot is reasonably accurate. On the night it went rogue, users reported that it was wrong across the board.

Many struggled to get accurate information out of the bot. Until the problem was fixed, ChatGPT’s responses simply could not be trusted.

Users Joke the AI Is Sentient

Many users found the whole situation funny, even if it frightened some. Inevitably, jokes about the AI becoming sentient and conscious circulated throughout the night.

However, some became more unnerved when ChatGPT reportedly sent messages that seemed threatening. In one case, the bot responded to a user with code, then broke off in the middle of it and wrote, “Let’s keep the line as if AI in the room.”

OpenAI Fixed the Issue

OpenAI quickly realized that something was wrong with ChatGPT. On Tuesday, the company posted a message on its status dashboard saying it was “investigating reports of unexpected responses from ChatGPT.”

By Wednesday morning, the company had resolved the glitch and updated users on the bot’s status. Even so, many people could not stop discussing the issue and why it happened in the first place.

OpenAI Doesn’t Explain the Glitch

While OpenAI identified and fixed the glitch, the company didn’t publicly explain why it happened. Instead, it simply said the problem was fixed and that users should no longer run into it.

Many were dismayed by OpenAI’s lack of transparency. Because AI is still a new and rapidly advancing technology, people want to know when something goes wrong, especially when ChatGPT starts saying strange things.

Theories Run Rampant about AI Issues

With no explanation from OpenAI, many people have taken to social media to speculate about the underlying cause of the glitch. Theories vary, though a few prevailing ones have persisted even now that the issue is fixed.

One of the most popular theories is that corrupted weights were to blame. Weights are the numerical parameters a model learns during training, and they shape the predictions the system makes about which words come next. If the weights were corrupted, that could explain why the bot couldn’t form a coherent sentence.
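To make the corrupted-weights theory more concrete, here is a toy sketch in Python. It is not how ChatGPT works and does not confirm the theory: the tiny weight table and the helper names (`WEIGHTS`, `next_word`, `generate`, `corrupt`) are hypothetical, and flipping the sign of every weight is only a crude stand-in for corruption. What it shows is that the same prediction code produces sensible or jumbled output depending solely on the numbers it is given.

```python
# Toy next-word predictor: a hypothetical illustration, not ChatGPT's architecture.
# Each entry scores how strongly one word should follow another.
WEIGHTS = {
    "the": {"cat": 5.0, "dog": 4.0, "sat": 0.5},
    "cat": {"sat": 5.0, "the": 1.0, "dog": 0.5},
    "dog": {"sat": 5.0, "the": 1.0, "cat": 0.5},
    "sat": {"the": 5.0, "cat": 1.0, "dog": 0.5},
}


def next_word(weights, word):
    """Pick the follower with the highest score (greedy prediction)."""
    scores = weights[word]
    return max(scores, key=scores.get)


def generate(weights, start, length):
    """Build a phrase by repeatedly predicting the next word."""
    words = [start]
    for _ in range(length - 1):
        words.append(next_word(weights, words[-1]))
    return " ".join(words)


def corrupt(weights):
    """Flip the sign of every weight: a crude stand-in for corrupted values."""
    return {
        word: {follower: -score for follower, score in followers.items()}
        for word, followers in weights.items()
    }


if __name__ == "__main__":
    print(generate(WEIGHTS, "the", 6))           # the cat sat the cat sat
    print(generate(corrupt(WEIGHTS), "the", 6))  # the sat dog cat dog cat
```

Nothing in `next_word` or `generate` changes between the two runs; only the weights do, which is why corruption of this kind would be expected to produce fluent-looking but meaningless text rather than an outright crash.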

Experts Warn Against Relying on AI

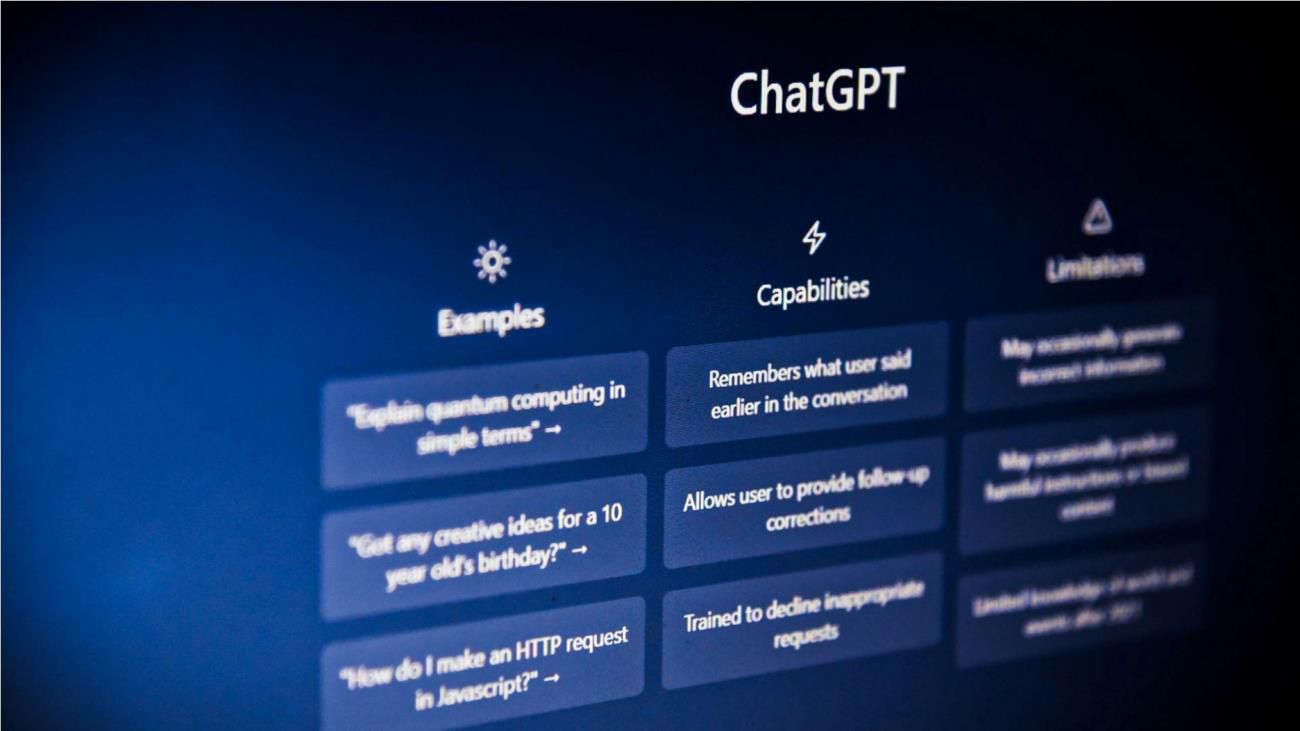

Since ChatGPT arrived on the scene in 2022, experts have raised concerns about it for a variety of reasons. Many have argued that we as a society shouldn’t rely completely on an AI chatbot.

For many, this glitch is proof of that point. As more people come to rely on AI systems, experts remind them that even AI can fail. As a result, they caution against leaning on it heavily, whether for work or for help with daily life.