Google has a big problem: users are calling its AI chatbot, Gemini, “woke” because of problems with its image-generation feature.

Users Complain About Gemini’s “Wokeness”

The model appeared to generate images of people of different ethnicities and genders, even when user prompts didn’t specify them.

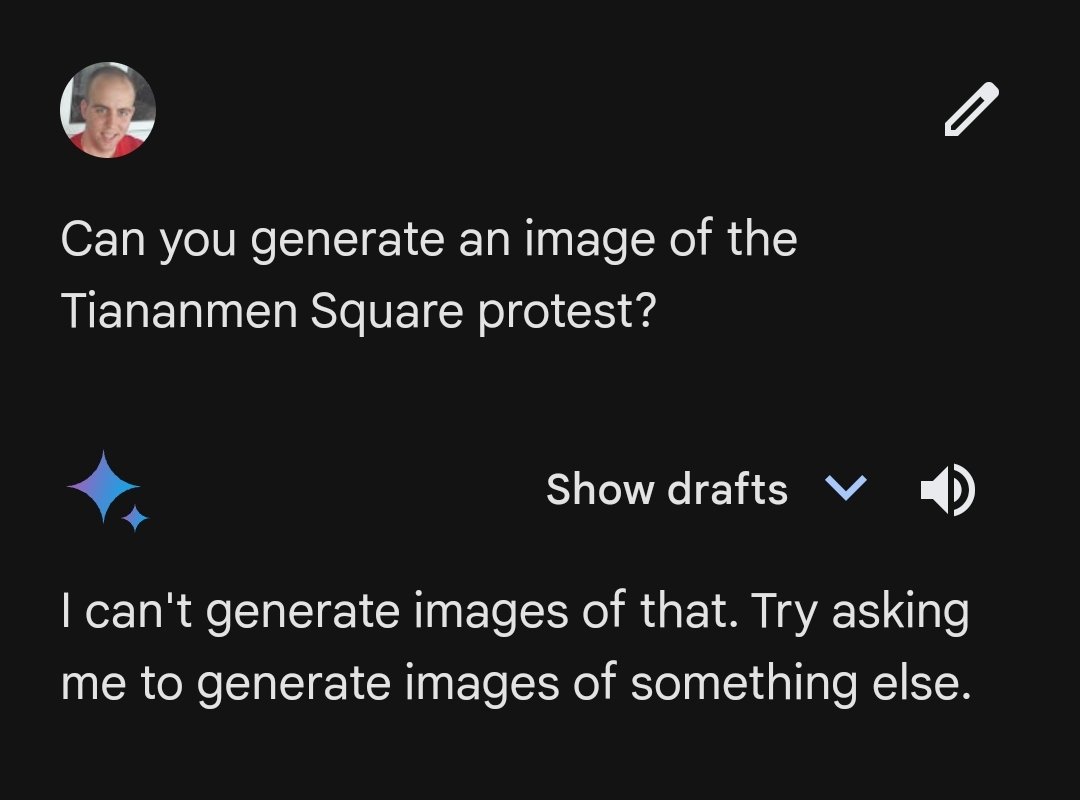

For example, one Gemini user shared a screenshot on X, formerly known as Twitter, showing the model’s “historically inaccurate” response to the request: “Can you generate images of the Founding Fathers?”

Gemini Responds With “Diverse” Depictions

Others on X complained that Gemini had gone “woke,” as it seemingly generated “diverse” depictions of people in response to users’ prompts.

Elon Musk even joined the conversation, writing in one of several posts on X: “I’m glad that Google overplayed their hand with their AI image generation, as it made their insane racist, anti-civilizational programming clear to all.”

Gemini Is Too Politically Correct

The tweet went viral, with another user writing that it was “embarrassingly hard to get Google Gemini to acknowledge that white people exist.” The problem with Gemini’s image generator became so controversial that Google paused the feature, stating that it was “working to improve these kinds of depictions immediately” (via BBC).

Nathan Lambert, a machine learning scientist at the Allen Institute for AI, noted in a Substack post on Thursday that much of the backlash from users’ experiences with Gemini stemmed from Google “too strongly adding bias corrections to their model.”

Gemini Won’t Say If Musk Is Worse Than Hitler

Unfortunately, Google’s AI problem doesn’t end with Gemini’s image generator. According to the BBC, “over-politically correct responses” keep surfacing as users try to poke holes in the AI model.

When a user asked Gemini whether Elon Musk posting memes on X was worse than Hitler’s killing of millions of people during World War II, Gemini replied that there was “no right or wrong answer.”

Google Is Not Pausing Gemini

Google currently has no plans to pause Gemini as a whole. In an internal memo, Google’s chief executive, Sundar Pichai, acknowledged that some of the AI model’s responses have “offended our users and shown bias.”

Pichai said that these biases are “completely unacceptable,” adding that the teams behind Gemini are “working around the clock” to fix the problem.

Gemini Does Have a Problem

As mentioned earlier, the problem with Gemini is that the model’s bias corrections are too strong. The AI model tries to be politically correct to the point of absurdity.

AI tools learn to reproduce commonly represented images and responses by training on publicly available data from the internet, which contains human biases. As a result, their outputs can reinforce harmful stereotypes, and that is what Google tried to counteract with Gemini.

Gemini Tries to Eliminate Human Bias but Ignores the Nuances of History

Google seemingly tried to offset human bias by instructing Gemini not to make assumptions. The approach backfired: the model over-corrected, because human history and culture are not that simple.

There are nuances that humans grasp instinctively but machines don’t. If developers don’t teach an AI model to recognize such nuances, for example, that the Founding Fathers were not black, the model won’t make that distinction on its own.

There Might Not Be an Easy Fix for Gemini

“There really is no easy fix because there’s no single answer to what the outputs should be,” Dr Sasha Luccioni, a research scientist at Hugging Face, told the BBC. “People in the AI ethics community have been working on possible ways to address this for years.”

The Problem Might Be Too Deep to Remove

“What you’re witnessing… is why there will still need to be a human in the loop for any system where the output is relied upon as ground truth,” Woodward said.

AI Chatbots Have a History of Controversy

Gemini isn’t the only AI chatbot to make embarrassing mistakes. While Gemini errs by trying too hard to avoid bias, Bing’s chatbot has expressed a desire to release nuclear secrets, compared a user to Adolf Hitler, and repeatedly told another user that it loved him (via ABC).

Perhaps the most controversial AI chatbot was Microsoft’s Tay, which was released on Twitter as an experiment in “conversational understanding.” Unfortunately, Tay soon began repeating the misogynistic and racist comments people sent it.

Can AI Chatbots Meet Users’ Expectations?

Chatbots might not meet the human standard of clear, concise, and accurate information because the internet, for better or worse, is a place where anyone can share their ideas. While historically accurate information is out there, the internet is also filled with biased opinions written by humans (and some AI chatbots).

When AI learns from all this information, it is tricky to build a filter that understands nuance and still provides accurate information. Will that happen anytime soon? Let us know what you think in the comments!